Application in organizations

In practice, controlled autonomy might involve delegating decision-making authority to AI project teams while requiring compliance with risk assessment protocols, ethical guidelines and regulatory requirements. For example, an organization may allow its AI team to choose algorithms and data sources but require regular reports and audits to ensure transparency and accountability. Likewise, automated systems may operate independently, yet their outputs are monitored for biases, errors or security vulnerabilities.

Magesh Kasthuri

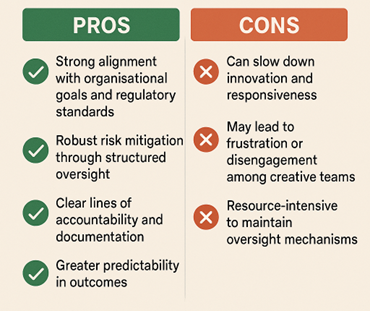

Benefits

- Ensures consistency with organizational objectives and regulatory frameworks

- Reduces the risk of unethical or unsafe AI behaviors

- Facilitates traceability and accountability for AI-driven decisions

- Supports compliance with data privacy and security requirements

Challenges

- Potential for slower innovation due to rigorous oversight

- Possible bureaucratic delays and reduced agility

- Risk of stifling creative problem-solving among AI teams

- Substantial resources required for monitoring and enforcement

Exploring guarded freedom

Guarded freedom in AI governance is a more flexible approach, in which AI systems and teams operate with considerable independence but still within a framework of essential safeguards. The “freedom” is “guarded” by core principles, ethical standards and minimal but effective controls. This model places trust in the expertise of AI professionals while maintaining a safety net against critical risks.

Application in organizations

Organizations embracing guarded freedom may empower AI teams to explore innovative solutions and iterate rapidly, intervening only at key stages or when predefined risk thresholds are crossed. For instance, a company might permit the use of experimental algorithms without prior approval, provided that every project undergoes a final ethical and compliance review before deployment. The focus is on enabling creativity and adaptability, with safeguards in place to catch major issues.